Building My Personal AI Stack in a Homelab — A Journey to Smarter Tools

Ever dreamt of having your own AI stack that you can control, tweak, and build upon, without relying entirely on cloud APIs? That’s what I’ve built in my homelab. This post walks you through the components of my AI stack and how I use them across different tools, and I hope it inspires you to build your own.

🚀 Why I Built a Personal AI Stack

In a world where most AI tools are cloud-locked and usage-limited, I wanted something private, flexible, and local: an AI assistant I could shape to my needs. Whether I’m brainstorming, coding, automating, or organizing my life, this stack powers it all.

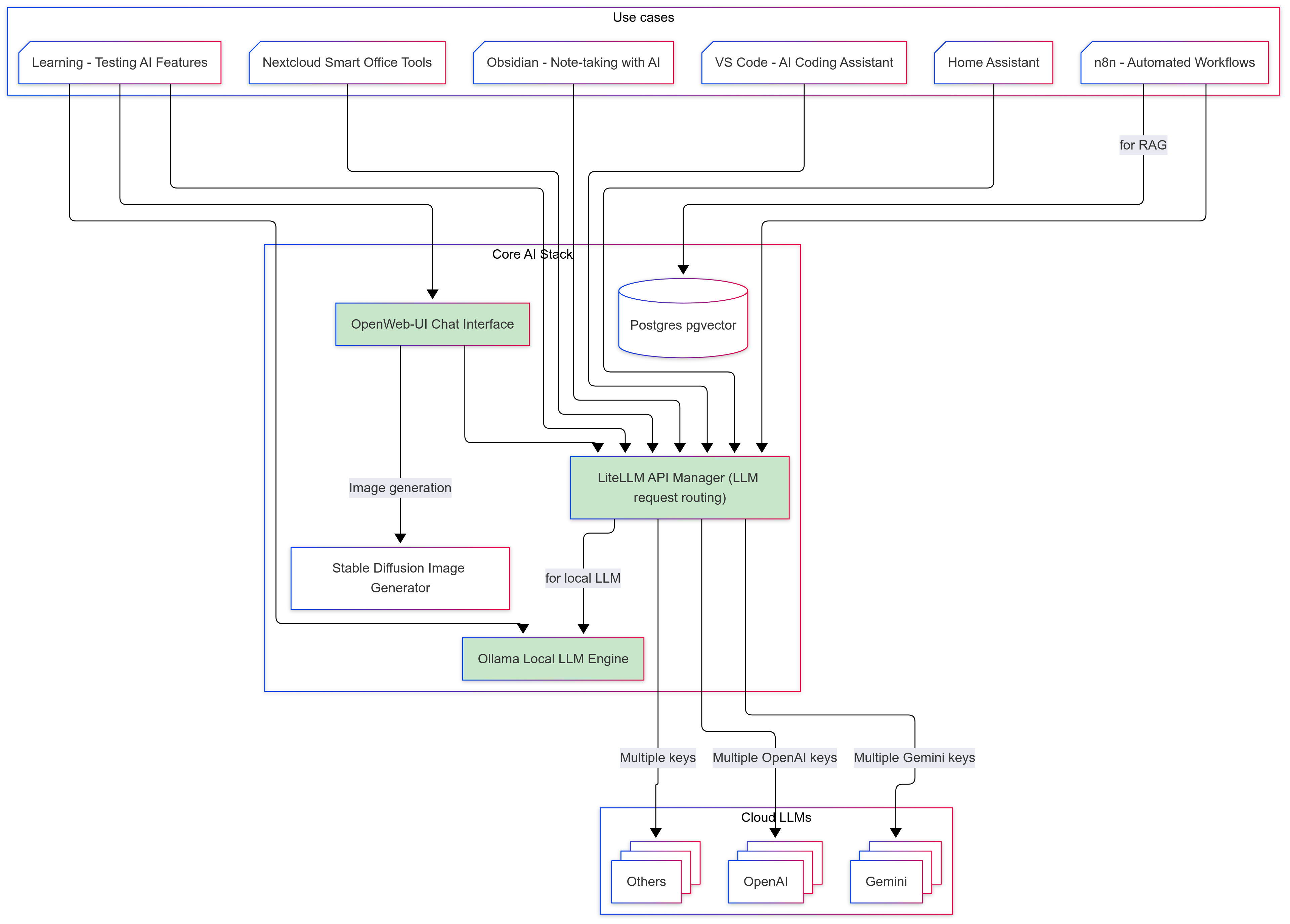

🧩 Architecture Overview

🛠️ Core Components Explained

🧠 Ollama – Local LLM Runner

Ollama is the engine that runs Large Language Models (LLMs) locally on my hardware. It downloads and manages quantized models, uses the GPU when one is available, and serves open-source models such as LLaMA and Mistral through a local REST API.
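Every other tool in the stack ultimately talks to that REST API. Here is a minimal sketch using only the Python standard library. It assumes Ollama is listening on its default port 11434 on the same machine and that a model named `mistral` has already been pulled. The helper function names are illustrative:

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default listen address


def build_generate_request(prompt: str, model: str = "mistral") -> urllib.request.Request:
    """Build a non-streaming POST to Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "mistral") -> str:
    """Send the prompt and return the model's full reply text."""
    with urllib.request.urlopen(build_generate_request(prompt, model)) as resp:
        # With "stream": False, Ollama returns a single JSON object whose
        # "response" field holds the generated text.
        return json.loads(resp.read())["response"]
```

Calling `generate("Explain what a homelab is in one sentence.")` returns the reply as a plain string. Setting `"stream": False` keeps the example simple. Interactive UIs usually leave streaming on and read the JSON lines as they arrive.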

💬 Open WebUI – The Friendly Face

Open WebUI is the chat interface for my LLMs. It connects to Ollama directly or routes requests through LiteLLM. I like it for its clean design, chat history, and plugin support.

🔑 LiteLLM – API Management & Routing

LiteLLM runs as a proxy server and acts as the API orchestrator for the whole stack. It handles:

- Key and quota management
- Routing requests between local models (Ollama) and cloud providers (OpenAI, Gemini)
- Load balancing between different models and endpoints

Perfect for managing API usage in a multi-service setup.
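To make the routing and quota ideas concrete, here is a toy model of what such a proxy does. It is not LiteLLM's own code. The deployment list mimics the `model_name`/`litellm_params` shape of a LiteLLM `model_list`. The aliases, hosts, keys, and quota numbers are all hypothetical. Simple round-robin stands in for LiteLLM's configurable routing strategies:

```python
import itertools

# Shaped like entries in a LiteLLM model_list; all values are hypothetical.
MODEL_LIST = [
    {"model_name": "chat", "litellm_params": {"model": "ollama/mistral", "api_base": "http://localhost:11434"}},
    {"model_name": "chat", "litellm_params": {"model": "ollama/llama3", "api_base": "http://gpu-box:11434"}},
    {"model_name": "smart", "litellm_params": {"model": "openai/gpt-4o"}},
]


class ToyRouter:
    """Maps a public model alias to a backend and enforces per-key request quotas."""

    def __init__(self, model_list, key_quotas):
        # Group deployments by alias, then cycle through each group (round-robin).
        groups = {}
        for entry in model_list:
            groups.setdefault(entry["model_name"], []).append(entry["litellm_params"])
        self._cycles = {alias: itertools.cycle(deps) for alias, deps in groups.items()}
        # Remaining requests allowed per API key.
        self._remaining = dict(key_quotas)

    def route(self, api_key: str, alias: str) -> dict:
        """Return the backend parameters that should serve this request."""
        if self._remaining.get(api_key, 0) <= 0:
            raise PermissionError(f"unknown key or quota exhausted: {api_key}")
        if alias not in self._cycles:
            raise KeyError(f"no deployment registered for {alias!r}")
        self._remaining[api_key] -= 1
        return next(self._cycles[alias])
```

Clients only ever ask for `chat` or `smart`. The router decides whether a request lands on a local Ollama box or a cloud provider, and that decoupling is the main benefit the real proxy gives a multi-service setup.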

🎨 Stable Diffusion – AI Art Generator

Using local models, I can generate stunning AI images without sending any data to the cloud. Stable Diffusion also integrates with Open WebUI, so I can run text-to-image prompts straight from a chat.
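The post doesn't name the Stable Diffusion front end. Assume it's AUTOMATIC1111's web UI started with the `--api` flag, which is one of the image backends Open WebUI can call. In that case, a text-to-image request is a POST to `/sdapi/v1/txt2img`, and the response carries base64-encoded PNGs. The stdlib sketch below uses that UI's default port 7860. The prompt parameters are illustrative:

```python
import base64
import json
import urllib.request
from typing import List

SD_URL = "http://localhost:7860"  # AUTOMATIC1111 default port (assumption)


def build_txt2img_request(prompt: str, steps: int = 20,
                          width: int = 512, height: int = 512) -> urllib.request.Request:
    """Build a POST to the AUTOMATIC1111 txt2img API (requires --api)."""
    payload = {
        "prompt": prompt,
        "negative_prompt": "blurry, low quality",
        "steps": steps,
        "width": width,
        "height": height,
    }
    return urllib.request.Request(
        f"{SD_URL}/sdapi/v1/txt2img",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def decode_images(response_body: bytes) -> List[bytes]:
    """The API returns {"images": [<base64 PNG>, ...]}; decode them to raw bytes."""
    return [base64.b64decode(img) for img in json.loads(response_body)["images"]]


def txt2img(prompt: str, out_path: str = "out.png") -> None:
    """Generate one image and write the first result to disk."""
    with urllib.request.urlopen(build_txt2img_request(prompt)) as resp:
        images = decode_images(resp.read())
    with open(out_path, "wb") as f:
        f.write(images[0])
```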

🧠 How I Use This Stack Daily

✍️ Obsidian – Smart Note-Taking

With AI-powered plugins, Obsidian connects to my stack to generate content and summaries and to brainstorm ideas. It’s like having a creative co-pilot for journaling and knowledge management.
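The post doesn't say which plugins it uses, but many Obsidian AI plugins let you set a custom OpenAI-compatible base URL, and LiteLLM's proxy exposes exactly that. Below is a rough sketch of what a "summarize this note" action sends. It assumes the proxy is on its default port 4000 and uses a hypothetical virtual key and a hypothetical `chat` model alias:

```python
import json
import re
import urllib.request

PROXY_URL = "http://localhost:4000"   # LiteLLM proxy default port (assumption)
API_KEY = "sk-obsidian-example"       # hypothetical LiteLLM virtual key

# Matches a YAML front-matter block at the very top of a Markdown note.
FRONT_MATTER = re.compile(r"\A---\n.*?\n---\n", re.DOTALL)


def note_body(markdown: str) -> str:
    """Drop YAML front matter so only the note's prose is summarized."""
    return FRONT_MATTER.sub("", markdown, count=1).strip()


def build_summary_request(markdown: str, model: str = "chat") -> urllib.request.Request:
    """Build an OpenAI-style chat completion request asking for a summary."""
    payload = {
        "model": model,
        "messages": [
            {"role": "system", "content": "Summarize the user's note in 3 bullet points."},
            {"role": "user", "content": note_body(markdown)},
        ],
    }
    return urllib.request.Request(
        f"{PROXY_URL}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {API_KEY}"},
        method="POST",
    )


def summarize(markdown: str) -> str:
    """Send the note and return the first choice's message text."""
    with urllib.request.urlopen(build_summary_request(markdown)) as resp:
        return json.loads(resp.read())["choices"][0]["message"]["content"]
```

The same OpenAI-compatible request shape works for any client that lets you override the base URL and key.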

💻 VS Code – Code with a Brain

With the Cline extension, VS Code connects to the stack for code generation, debugging help, and explanations. It’s like ChatGPT, but self-hosted and customized for my workflows.

🗂️ Nextcloud – Office, but Smarter

Think Google Docs or Microsoft Office with AI, but powered by my own backend. I can summarize documents, write reports, or generate slides with AI help, and it all stays private.

🏠 Home Assistant – My Smart Home Butler

With Home Assistant integrated into the stack, I can interact with my home using natural language:

“Hey Jarvis, how’s the weather?”

“Turn off all the lights and summarize today’s news.”
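You can also send the same kind of request from a script instead of a voice satellite. Home Assistant's REST endpoint `POST /api/conversation/process` passes text to the configured conversation agent, which could be one backed by the Ollama integration. The sketch below assumes Home Assistant is on its default port 8123 and that you have a long-lived access token. The host and token are placeholders:

```python
import json
import urllib.request

HA_URL = "http://homeassistant.local:8123"  # placeholder host, default HA port
HA_TOKEN = "YOUR_LONG_LIVED_ACCESS_TOKEN"     # placeholder; create one in your HA profile


def build_conversation_request(text: str, language: str = "en") -> urllib.request.Request:
    """Build a request that sends free text to Home Assistant's conversation agent."""
    return urllib.request.Request(
        f"{HA_URL}/api/conversation/process",
        data=json.dumps({"text": text, "language": language}).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {HA_TOKEN}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def spoken_reply(result: dict) -> str:
    """Pull the plain-text answer out of a conversation response."""
    return result["response"]["speech"]["plain"]["speech"]


def ask_home(text: str) -> str:
    """Send a command or question and return the agent's reply."""
    with urllib.request.urlopen(build_conversation_request(text)) as resp:
        return spoken_reply(json.loads(resp.read()))
```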

🔄 n8n – Automated AI Workflows

This no-code/low-code automation platform connects with my stack to run tasks like:

- Auto-generating replies
- Summarizing emails
- Creating blog outlines from notes
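One common way to start a workflow like this from another script is an n8n Webhook trigger node. Once the workflow is activated, its production URL has the form `/webhook/<path>`. The sketch below forwards an email to a hypothetical `summarize-email` workflow and assumes n8n's default port 5678. The payload fields are invented for illustration; use whatever your workflow expects:

```python
import json
import urllib.request

N8N_URL = "http://localhost:5678"      # n8n default port (assumption)
WEBHOOK_PATH = "summarize-email"       # hypothetical path set on the Webhook node


def build_webhook_request(sender: str, subject: str, body: str) -> urllib.request.Request:
    """POST an email as JSON to the workflow's production webhook URL."""
    payload = {"from": sender, "subject": subject, "body": body}
    return urllib.request.Request(
        f"{N8N_URL}/webhook/{WEBHOOK_PATH}",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def trigger_summary(sender: str, subject: str, body: str) -> str:
    """Fire the workflow; the reply body depends on how the workflow responds."""
    with urllib.request.urlopen(build_webhook_request(sender, subject, body)) as resp:
        return resp.read().decode("utf-8")
```

Inside n8n, the workflow would pass the body to an LLM node that points at the stack and then return or store the summary.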

🧪 Experiments

My AI lab wouldn’t be complete without a test bench. I use my stack to quickly prototype new AI use cases (PDF summarizers, chatbots, creative writing tools) without hitting usage limits.
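Long documents like PDFs often exceed a small local model's context window. A common workaround is map-reduce summarization: split the text into overlapping chunks, summarize each chunk, then summarize the combined summaries. The minimal sketch below assumes the PDF text has already been extracted. Chunk sizes are measured in characters, which is only a crude stand-in for tokens. `summarize` can be any callable, for example one that calls the stack:

```python
from typing import Callable, List


def chunk_text(text: str, size: int = 4000, overlap: int = 200) -> List[str]:
    """Split text into windows of `size` chars that overlap by `overlap` chars."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be >= 0 and smaller than size")
    step = size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + size])
        # Stop once a window reaches the end, so no tail fragment is duplicated.
        if start + size >= len(text):
            break
    return chunks


def map_reduce_summary(text: str, summarize: Callable[[str], str],
                       size: int = 4000, overlap: int = 200) -> str:
    """Summarize each chunk (map), then summarize the joined partials (reduce)."""
    if not text:
        return ""
    partials = [summarize(chunk) for chunk in chunk_text(text, size, overlap)]
    if len(partials) == 1:
        return partials[0]
    return summarize("\n".join(partials))
```

The reduce step here makes a single call. For very long documents, the joined partial summaries may still be too long for one call, and you would repeat the reduce step until they fit.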

🧰 Hardware + Software Stack

| Component | Details |
|---|---|
| GPU | NVIDIA GTX 1660 Super |
| Host | Linux container (LXC/Docker) |
| AI Support | NVIDIA Docker + CUDA libraries |
| Models | LLaMA, Mistral (local); OpenAI GPT, Gemini (cloud, via LiteLLM) |
| Image Models | Stable Diffusion, SDXL, DreamShaper |

This setup balances power and affordability — and is more than enough for most personal LLM and image generation tasks.
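A back-of-the-envelope check shows why quantized models matter on this card. The GTX 1660 Super has 6 GB of VRAM, and model weights need roughly parameters × bits-per-weight ÷ 8 bytes. The 20% headroom for the KV cache and runtime buffers below is a rough assumption, not a measured figure. Real quantization formats also use slightly more than their nominal bits per weight:

```python
GPU_VRAM_GB = 6.0  # GTX 1660 Super
OVERHEAD = 1.2     # assumed headroom for KV cache and runtime buffers


def weights_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate weight size in GB: params * bits / 8 bytes."""
    return params_billion * bits_per_weight / 8


def fits_on_gpu(params_billion: float, bits_per_weight: float,
                vram_gb: float = GPU_VRAM_GB) -> bool:
    """True if the weights plus assumed overhead fit entirely in VRAM."""
    return weights_gb(params_billion, bits_per_weight) * OVERHEAD <= vram_gb


for params, bits in [(7, 16), (7, 8), (7, 4), (13, 4)]:
    need = weights_gb(params, bits) * OVERHEAD
    verdict = "fits" if fits_on_gpu(params, bits) else "too big for VRAM"
    print(f"{params}B @ {bits}-bit: ~{need:.1f} GB -> {verdict}")
```

Under these assumptions, a 7B model at 4-bit needs about 4.2 GB and fits, while a 7B model at 8-bit (about 8.4 GB) or a 13B model at 4-bit (about 7.8 GB) does not. Ollama can still run larger models by offloading some layers to the CPU, at a cost in speed.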

🌟 Final Thoughts

Building my own AI stack was one of the most empowering things I’ve done in recent years. It gave me:

- Full control over AI tools
- Endless ways to innovate
- A privacy-first way to use generative AI

If you’re into homelabs or automation, or just want to explore AI beyond cloud APIs, this setup is a great place to start. You don’t need enterprise GPUs, just a bit of curiosity and a tinkering spirit.

💡 Inspired to Build Your Own?

Feel free to copy this architecture, tweak it, or even ask me questions. Your personal AI assistant is just a homelab away.